New Updated DP-200 Exam Questions from PassLeader DP-200 PDF dumps! Welcome to download the newest PassLeader DP-200 VCE dumps: https://www.passleader.com/dp-200.html (60 Q&As)


P.S. New DP-200 dumps PDF: https://drive.google.com/open?id=1CTHwJ44u5lT4tsb2qo8oThaQ5c_vwun1

P.S. New DP-100 dumps PDF: https://drive.google.com/open?id=1f70QWrCCtvNby8oY6BYvrMS16IXuRiR2

P.S. New DP-201 dumps PDF: https://drive.google.com/open?id=1VdzP5HksyU93Arqn65qPe5UFEm2Sxooh

NEW QUESTION 1

A company has a SaaS solution that uses Azure SQL Database with elastic pools. The solution contains a dedicated database for each customer organization. Customer organizations have peak usage at different periods during the year. You need to implement the Azure SQL Database elastic pool to minimize cost. Which option or options should you configure?

A. Number of transactions only

B. eDTUs per database only

C. Number of databases only

D. CPU usage only

E. eDTUs and max data size

Answer: E

Explanation:

The best size for a pool depends on the aggregate resources needed for all databases in the pool. This involves determining the following:

– Maximum resources utilized by all databases in the pool (either maximum DTUs or maximum vCores depending on your choice of resourcing model).

– Maximum storage bytes utilized by all databases in the pool.

Note: Elastic pools enable the developer to purchase resources for a pool shared by multiple databases to accommodate unpredictable periods of usage by individual databases. You can configure resources for the pool based either on the DTU-based purchasing model or the vCore-based purchasing model.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-pool
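The sizing guidance above can be turned into a quick estimate. The sketch below is a minimal illustration that assumes the DTU-based purchasing model. It uses the rule of thumb from the elastic pool documentation: the pool needs the larger of two values, (total databases × average DTU per database) or (concurrently peaking databases × peak DTU per database). It also needs enough max data size for all databases combined. The function names and the numbers in the usage example are hypothetical.

```python
def estimate_pool_edtus(db_count: int, avg_dtu: float,
                        concurrent_peaks: int, peak_dtu: float) -> float:
    """Estimate the eDTUs an elastic pool needs (DTU purchasing model)."""
    # Steady-state demand: every database running at its average load.
    steady = db_count * avg_dtu
    # Burst demand: only the databases that peak at the same time.
    burst = concurrent_peaks * peak_dtu
    return max(steady, burst)


def estimate_pool_storage_gb(db_sizes_gb: list[float]) -> float:
    """The pool's max data size must cover the storage of all its databases."""
    return sum(db_sizes_gb)


if __name__ == "__main__":
    # Hypothetical: 20 customer databases averaging 5 DTUs each, with at most
    # 3 of them peaking at 80 DTUs at the same time.
    pool = estimate_pool_edtus(20, 5, 3, 80)
    standalone = 20 * 80  # each database provisioned for its own peak
    print(f"pool eDTUs: {pool}, standalone DTUs: {standalone}")
```

Because customer organizations peak at different periods, the pool is sized for the concurrent peak rather than the sum of every database's peak. That difference is where the cost saving comes from.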

NEW QUESTION 2

A company manages several on-premises Microsoft SQL Server databases. You need to migrate the databases to Microsoft Azure by using a backup and restore process. Which data technology should you use?

A. Azure SQL Database single database

B. Azure SQL Data Warehouse

C. Azure Cosmos DB

D. Azure SQL Database Managed Instance

Answer: D

Explanation:

Managed Instance is a deployment option of Azure SQL Database with the following characteristics:

– Near 100% compatibility with the latest on-premises SQL Server (Enterprise Edition) Database Engine.

– A native virtual network (VNet) implementation that addresses common security concerns.

– A business model favorable for on-premises SQL Server customers.

The managed instance deployment model allows existing SQL Server customers to lift and shift their on-premises applications to the cloud with minimal application and database changes. Unlike a single database, a managed instance supports native restore of SQL Server backup (.bak) files from Azure Blob storage. This is what a backup-and-restore migration requires.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-managed-instance

NEW QUESTION 3

A company is designing a hybrid solution to synchronize data from an on-premises Microsoft SQL Server database to Azure SQL Database. You must perform an assessment of databases to determine whether data will move without compatibility issues. You need to perform the assessment. Which tool should you use?

A. SQL Server Migration Assistant (SSMA)

B. Microsoft Assessment and Planning Toolkit

C. SQL Vulnerability Assessment (VA)

D. Azure SQL Data Sync

E. Data Migration Assistant (DMA)

Answer: E

Explanation:

The Data Migration Assistant (DMA) helps you upgrade to a modern data platform by detecting compatibility issues that can impact database functionality in your new version of SQL Server or Azure SQL Database. DMA recommends performance and reliability improvements for your target environment and allows you to move your schema, data, and uncontained objects from your source server to your target server.

https://docs.microsoft.com/en-us/sql/dma/dma-overview

NEW QUESTION 4

You develop data engineering solutions for a company. A project requires the deployment of resources to Microsoft Azure for batch data processing on Azure HDInsight. Batch processing will run daily and must:

– Scale to minimize costs

– Be monitored for cluster performance

You need to recommend a tool that will monitor clusters and provide information to suggest how to scale.

Solution: Monitor cluster load using the Ambari Web UI.

Does the solution meet the goal?

A. Yes

B. No

Answer: B

Explanation:

Ambari Web UI does not provide information to suggest how to scale. Instead, monitor clusters by using Azure Log Analytics and HDInsight cluster management solutions.

https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-oms-log-analytics-tutorial

https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-manage-ambari

NEW QUESTION 5

You develop data engineering solutions for a company. A project requires the deployment of resources to Microsoft Azure for batch data processing on Azure HDInsight. Batch processing will run daily and must:

– Scale to minimize costs

– Be monitored for cluster performance

You need to recommend a tool that will monitor clusters and provide information to suggest how to scale.

Solution: Monitor clusters by using Azure Log Analytics and HDInsight cluster management solutions.

Does the solution meet the goal?

A. Yes

B. No

Answer: A

Explanation:

HDInsight provides cluster-specific management solutions that you can add for Azure Monitor logs. Management solutions add functionality to Azure Monitor logs, providing additional data and analysis tools. These solutions collect important performance metrics from your HDInsight clusters and provide the tools to search the metrics. These solutions also provide visualizations and dashboards for most cluster types supported in HDInsight. By using the metrics that you collect with the solution, you can create custom monitoring rules and alerts.

https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-oms-log-analytics-tutorial
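The explanation above mentions custom monitoring rules. As a hypothetical example of one, the sketch below turns a series of cluster utilization samples into a scaling suggestion. The samples might be YARN memory utilization percentages exported from Azure Monitor logs. The function name and the 80%/30% thresholds are assumptions chosen for illustration. They are not values from the HDInsight documentation.

```python
from statistics import mean


def suggest_scaling(utilization_pct: list[float],
                    high: float = 80.0, low: float = 30.0) -> str:
    """Suggest a scaling action from utilization samples (hypothetical rule)."""
    if not utilization_pct:
        raise ValueError("need at least one utilization sample")
    peak = max(utilization_pct)
    if peak >= high:
        # The cluster came close to saturation during the batch window.
        return "scale out"
    if peak < low:
        # Even the busiest sample left most capacity idle.
        return "scale in"
    return "no change"


if __name__ == "__main__":
    daily_run = [35.0, 62.5, 88.0, 71.0]  # hypothetical hourly samples
    print(suggest_scaling(daily_run), f"(average {mean(daily_run):.1f}%)")
```

In Azure Monitor, the same logic would typically be expressed as alert rules on the collected metrics rather than as application code.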

NEW QUESTION 6

A company plans to use Azure Storage for file storage purposes. Compliance rules require:

– A single storage account that logs all operations, including reads, writes, and deletes.

– Retention of an on-premises copy of historical operations.

You need to configure the storage account. Which two actions should you perform? (Each correct answer presents part of the solution. Choose two.)

A. Configure the storage account to log read, write and delete operations for ServiceType Blob.

B. Use the AzCopy tool to download log data from $logs/blob.

C. Configure the storage account to log read, write and delete operations for ServiceType Table.

D. Use the storage client to download log data from $logs/table.

E. Configure the storage account to log read, write and delete operations for ServiceType Queue.

Answer: AB

Explanation:

Storage Logging logs request data in a set of blobs in a blob container named $logs in your storage account. This container does not appear when you list all the blob containers in your account, but you can see its contents if you access it directly. To view and analyze your log data, download the blobs that contain the log data you are interested in to a local machine. Many storage-browsing tools can download blobs from your storage account. You can also use AzCopy, the command-line tool provided by the Azure Storage team, to download your log data.

https://docs.microsoft.com/en-us/rest/api/storageservices/enabling-storage-logging-and-accessing-log-data
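To make the download step concrete, the sketch below builds the $logs prefix for one hour of Blob service logs. It then tallies operation types in downloaded log lines. It assumes the documented naming convention `<service-name>/YYYY/MM/DD/hhmm/<counter>.log`, where the minutes are always `00`. It also assumes Storage Analytics log format version 1.0, in which the third field is the operation type and the eleventh is the service type. The sample log line is shortened and hypothetical.

```python
import csv
from collections import Counter
from datetime import datetime


def log_prefix(service: str, hour: datetime) -> str:
    """Prefix of the log blobs in $logs for one service and hour."""
    # Log blobs are grouped by hour; the minutes part is always "00".
    return f"{service}/{hour:%Y/%m/%d/%H}00/"


def count_operations(lines: list[str]) -> Counter:
    """Count (service type, operation type) pairs in version 1.0 log lines."""
    counts: Counter = Counter()
    # Fields are semicolon-delimited; quoted fields (such as URLs) may
    # contain semicolons, so use a CSV reader instead of str.split.
    for fields in csv.reader(lines, delimiter=";", quotechar='"'):
        if len(fields) > 10 and fields[0] == "1.0":
            counts[(fields[10], fields[2])] += 1
    return counts


if __name__ == "__main__":
    print(log_prefix("blob", datetime(2019, 7, 31, 18, 52)))
    sample = [
        '1.0;2019-07-31T18:52:40.924Z;PutBlob;Success;201;18;10;'
        'authenticated;myaccount;myaccount;blob;'
        '"https://myaccount.blob.core.windows.net/data/a.csv"',
    ]
    print(count_operations(sample))
```

You can append the prefix to the $logs container URL to limit an AzCopy download to a single hour of Blob logs.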

NEW QUESTION 7

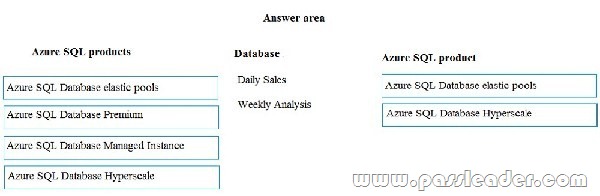

Drag and Drop

You are developing the data platform for a global retail company. The company operates during normal working hours in each region. The analytical database is used once a week for building sales projections. Each region maintains its own private virtual network. Building the sales projections is very resource intensive and generates upwards of 20 terabytes (TB) of data. Microsoft Azure SQL Databases must be provisioned.

– Database provisioning must maximize performance and minimize cost.

– The daily sales for each region must be stored in an Azure SQL Database instance.

– Once a day, the data for all regions must be loaded in an analytical Azure SQL Database instance.

You need to provision Azure SQL database instances. How should you provision the database instances? (To answer, drag the appropriate Azure SQL products to the correct databases. Each Azure SQL product may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.)

Answer:

Explanation:

Box 1: Azure SQL Database elastic pools. SQL Database elastic pools are a simple, cost-effective solution for managing and scaling multiple databases that have varying and unpredictable usage demands. The databases in an elastic pool are on a single Azure SQL Database server and share a set number of resources at a set price. Elastic pools in Azure SQL Database enable SaaS developers to optimize the price performance for a group of databases within a prescribed budget while delivering performance elasticity for each database.

Box 2: Azure SQL Database Hyperscale. A Hyperscale database is an Azure SQL database in the Hyperscale service tier that is backed by the Hyperscale scale-out storage technology. A Hyperscale database supports up to 100 TB of data and provides high throughput and performance, as well as rapid scaling to adapt to the workload requirements. Scaling is transparent to the application – connectivity, query processing, and so on, work like any other SQL database.

Incorrect:

Azure SQL Database Managed Instance: The managed instance deployment model is designed for customers looking to migrate a large number of apps to a fully managed PaaS cloud environment with as little migration effort as possible. The source can be on-premises, IaaS, self-built, or ISV-provided environments.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-pool

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-service-tier-hyperscale-faq
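Elastic pools suit the regional databases because each region is busy only during its own working hours. The sketch below compares two values: the DTUs needed when each regional database is provisioned for its own peak, and the peak of the combined load in a shared pool. The regions, the 8-hour offsets, and the 100-DTU load are hypothetical.

```python
def hourly_profile(start_utc: int, hours: int, load: float) -> list[float]:
    """24-hour load profile: `load` DTUs for `hours` hours from `start_utc`."""
    profile = [0.0] * 24
    for h in range(hours):
        profile[(start_utc + h) % 24] = load
    return profile


def standalone_vs_pooled(profiles: list[list[float]]) -> tuple[float, float]:
    """Return (sum of individual peaks, peak of the combined pool load)."""
    standalone = sum(max(p) for p in profiles)
    # Add the regions' loads hour by hour, then take the busiest hour.
    pooled = max(sum(hour) for hour in zip(*profiles))
    return standalone, pooled


if __name__ == "__main__":
    # Three hypothetical regions whose working hours do not overlap.
    regions = [hourly_profile(start, 8, 100.0) for start in (0, 8, 16)]
    print(standalone_vs_pooled(regions))
```

When working hours overlap, the pooled peak rises toward the standalone total, so the savings depend on how staggered the regions are.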

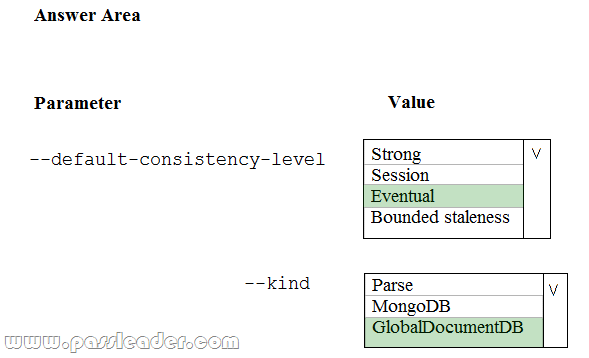

NEW QUESTION 8

HotSpot

A company is planning to use Microsoft Azure Cosmos DB as the data store for an application. You have the following Azure CLI command:

az cosmosdb create --name "cosmosdbdev1" --resource-group "rgdev"

You need to minimize latency and expose the SQL API. How should you complete the command? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

Box 1: Eventual. With Azure Cosmos DB, developers can choose from five well-defined consistency models on the consistency spectrum. From strongest to most relaxed, the models are strong, bounded staleness, session, consistent prefix, and eventual. Eventual is the most relaxed level, so it offers the lowest latency.

Box 2: GlobalDocumentDB. Select Core (SQL) to create a document database and query it by using SQL syntax. In the Azure CLI, this API corresponds to the GlobalDocumentDB account kind.

Note: The API determines the type of account to create. Azure Cosmos DB provides five APIs: Core(SQL) and MongoDB for document databases, Gremlin for graph databases, Azure Table, and Cassandra.

https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels

https://docs.microsoft.com/en-us/azure/cosmos-db/create-sql-api-dotnet
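With both boxes filled in, the command selects the SQL (Core) API with `--kind GlobalDocumentDB` and sets the default consistency with `--default-consistency-level Eventual`. The sketch below assembles that argument list in Python so that it could be passed to `subprocess.run`. It only builds the list. Running it assumes the Azure CLI is installed and signed in. The helper name is hypothetical.

```python
import shlex

# Values accepted by --default-consistency-level, strongest to weakest.
CONSISTENCY_LEVELS = ["Strong", "BoundedStaleness", "Session",
                      "ConsistentPrefix", "Eventual"]


def cosmosdb_create_args(name: str, resource_group: str,
                         consistency: str = "Eventual") -> list[str]:
    """Build an `az cosmosdb create` call for a SQL (Core) API account."""
    if consistency not in CONSISTENCY_LEVELS:
        raise ValueError(f"unknown consistency level: {consistency}")
    return [
        "az", "cosmosdb", "create",
        "--name", name,
        "--resource-group", resource_group,
        "--kind", "GlobalDocumentDB",  # SQL (Core) API
        "--default-consistency-level", consistency,
    ]


if __name__ == "__main__":
    args = cosmosdb_create_args("cosmosdbdev1", "rgdev")
    print(shlex.join(args))
    # To actually create the account (requires `az login`):
    # import subprocess; subprocess.run(args, check=True)
```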

NEW QUESTION 9

You plan to use Microsoft Azure SQL Database instances with strict user access control. A user object must:

– Move with the database if it is run elsewhere

– Be able to create additional users

You need to create the user object with correct permissions. Which two Transact-SQL commands should you run? (Each correct answer presents part of the solution. Choose two.)

A. ALTER LOGIN Mary WITH PASSWORD = 'strong_password';

B. CREATE LOGIN Mary WITH PASSWORD = 'strong_password';

C. ALTER ROLE db_owner ADD MEMBER Mary;

D. CREATE USER Mary WITH PASSWORD = 'strong_password';

E. GRANT ALTER ANY USER TO Mary;

Answer: CD

Explanation:

C: ALTER ROLE adds or removes members to or from a database role, or changes the name of a user-defined database role. Members of the db_owner fixed database role can perform all configuration and maintenance activities on the database, and can also drop the database in SQL Server.

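D: CREATE USER adds a user to the current database. A user created WITH PASSWORD, without a login, is a contained database user. The database authenticates this user directly, so the user moves with the database when the database runs elsewhere.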

Note: Logins are created at the server level, while users are created at the database level. In other words, a login allows you to connect to the SQL Server service (also called an instance), and permissions inside the database are granted to the database users, not the logins. Logins are assigned to server roles (for example, serveradmin), and database users are assigned to roles within that database (e.g., db_datareader, db_backupoperator).

https://docs.microsoft.com/en-us/sql/t-sql/statements/alter-role-transact-sql

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-user-transact-sql
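Answers D and C amount to two statements run in the user database. The sketch below generates them in Python. It bracket-quotes the user name and doubles single quotes in the password, which is how T-SQL escapes identifiers and string literals. The helper names are hypothetical. In practice, you would run the statements through your usual client while connected to the target database.

```python
def quote_identifier(name: str) -> str:
    """Bracket-quote a T-SQL identifier, doubling any closing brackets."""
    return "[" + name.replace("]", "]]") + "]"


def quote_string(value: str) -> str:
    """Quote a T-SQL string literal, doubling any single quotes."""
    return "'" + value.replace("'", "''") + "'"


def contained_admin_user_sql(user: str, password: str) -> list[str]:
    """Statements for a contained user who can also create other users."""
    ident = quote_identifier(user)
    return [
        # D: a user WITH PASSWORD is authenticated by the database itself,
        # so it moves with the database (no server-level login needed).
        f"CREATE USER {ident} WITH PASSWORD = {quote_string(password)};",
        # C: db_owner members can perform all configuration tasks,
        # including creating additional users.
        f"ALTER ROLE db_owner ADD MEMBER {ident};",
    ]


if __name__ == "__main__":
    for stmt in contained_admin_user_sql("Mary", "strong_password"):
        print(stmt)
```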

NEW QUESTION 10

You develop data engineering solutions for a company. You need to ingest and visualize real-time Twitter data by using Microsoft Azure. Which three technologies should you use? (Each correct answer presents part of the solution. Choose three.)

A. Event Grid topic.

B. Azure Stream Analytics Job that queries Twitter data from an Event Hub.

C. Azure Stream Analytics Job that queries Twitter data from an Event Grid.

D. Logic App that sends Twitter posts which have target keywords to Azure.

E. Event Grid subscription.

F. Event Hub instance.

Answer: BDF

Explanation:

You can use Azure Logic Apps to send tweets that match target keywords to an event hub. A Stream Analytics job then reads from the event hub and sends the results to Power BI.

https://community.powerbi.com/t5/Integrations-with-Files-and/Twitter-streaming-analytics-step-by-step/td-p/9594
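In this pipeline, the Stream Analytics job typically aggregates incoming tweets over fixed, non-overlapping time windows before sending them to Power BI. An example query clause is `GROUP BY TumblingWindow(second, 10), Topic`. The Python sketch below reproduces a tumbling-window count locally on a few hypothetical events so that the windowing logic is easy to see. It is a simulation, not Stream Analytics code. The `topic` field and the choice to label windows by their start time are assumptions.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def tumbling_counts(events: list[tuple[datetime, str]],
                    size: timedelta) -> Counter:
    """Count events per (window start, topic) for fixed, non-overlapping windows."""
    epoch = datetime(1970, 1, 1, tzinfo=timezone.utc)
    counts: Counter = Counter()
    for ts, topic in events:
        # Each event belongs to exactly one window: floor its time to the size.
        offset = (ts - epoch) // size
        window_start = epoch + offset * size
        counts[(window_start, topic)] += 1
    return counts


if __name__ == "__main__":
    t0 = datetime(2019, 7, 31, 12, 0, 0, tzinfo=timezone.utc)
    tweets = [(t0, "azure"), (t0 + timedelta(seconds=4), "azure"),
              (t0 + timedelta(seconds=12), "azure"),
              (t0 + timedelta(seconds=13), "sql")]
    for (start, topic), n in sorted(tumbling_counts(tweets, timedelta(seconds=10)).items()):
        print(start.isoformat(), topic, n)
```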

NEW QUESTION 11

……

Case Study 1 – Proseware, Inc.

Proseware, Inc. develops and manages a product named Poll Taker. The product is used for delivering public opinion polling and analysis. Polling data comes from a variety of sources, including online surveys, house-to-house interviews, and booths at public events.

……

NEW QUESTION 51

You need to ensure that phone-based polling data can be analyzed in the PollingData database. How should you configure Azure Data Factory?

A. Use a tumbling schedule trigger.

B. Use an event-based trigger.

C. Use a schedule trigger.

D. Use manual execution.

Answer: C

Explanation:

When you create a schedule trigger, you specify a schedule (start date, recurrence, end date, and so on) for the trigger and associate it with a Data Factory pipeline.

https://docs.microsoft.com/en-us/azure/data-factory/how-to-create-schedule-trigger
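A schedule trigger is defined in JSON with a `recurrence` block and a list of pipeline references. The sketch below builds such a definition as a Python dictionary, following the schedule-trigger structure described in the Data Factory documentation. The pipeline name `CopyPhonePollingData`, the daily frequency, and the start time are hypothetical placeholders, because the case study's actual schedule is not given here.

```python
import json
from typing import Optional


def schedule_trigger(pipeline: str, frequency: str, interval: int,
                     start_time: str, end_time: Optional[str] = None) -> dict:
    """Build an Azure Data Factory schedule trigger definition."""
    recurrence = {
        "frequency": frequency,  # e.g. "Minute", "Hour", "Day", "Week", "Month"
        "interval": interval,    # run every `interval` units of `frequency`
        "startTime": start_time,
        "timeZone": "UTC",
    }
    if end_time is not None:
        recurrence["endTime"] = end_time
    return {
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {"recurrence": recurrence},
            # The trigger starts this pipeline on every occurrence.
            "pipelines": [{
                "pipelineReference": {
                    "type": "PipelineReference",
                    "referenceName": pipeline,
                }
            }],
        }
    }


if __name__ == "__main__":
    trigger = schedule_trigger("CopyPhonePollingData", "Day", 1,
                               "2019-08-01T00:00:00Z")
    print(json.dumps(trigger, indent=2))
```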

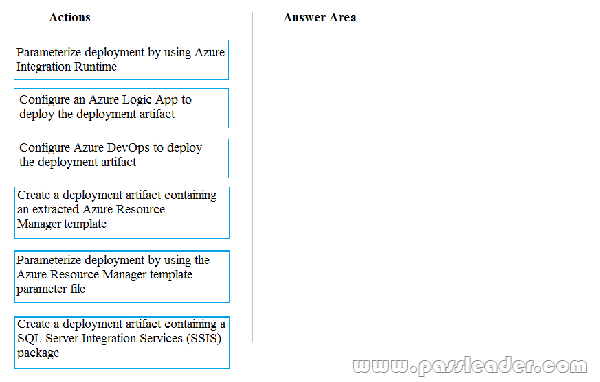

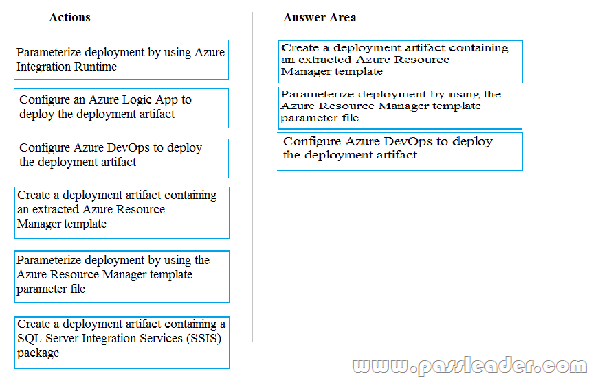

NEW QUESTION 52

Drag and Drop

You need to ensure that phone-based polling data can be analyzed in the PollingData database. Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Answer:

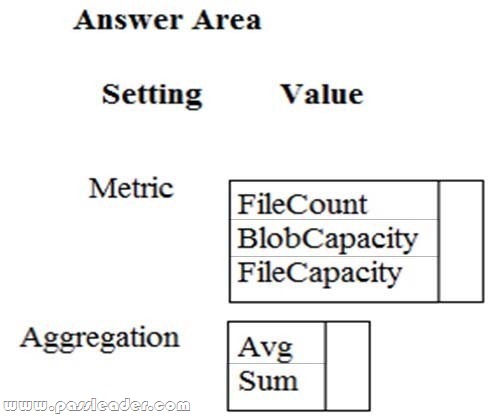

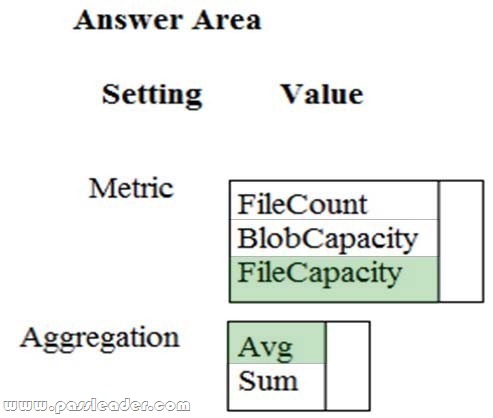

NEW QUESTION 53

HotSpot

You need to ensure phone-based polling data upload reliability requirements are met. How should you configure monitoring? (To answer, select the appropriate options in the answer area.)

Answer:

NEW QUESTION 54

……

Case Study 2 – Contoso

Contoso relies on an extensive partner network for marketing, sales, and distribution. Contoso uses external companies that manufacture everything from the actual pharmaceutical to the packaging. Most of the company’s data resides in Microsoft SQL Server databases. Application databases fall into one of the following tiers:

……

NEW QUESTION 57

You need to process and query ingested Tier 9 data. Which two options should you use? (Each correct answer presents part of the solution. Choose two.)

A. Azure Notification Hub

B. Transact-SQL statements

C. Azure Cache for Redis

D. Apache Kafka statements

E. Azure Event Grid

F. Azure Stream Analytics

Answer: EF

Explanation:

Event Hubs provides a Kafka endpoint that your existing Kafka-based applications can use as an alternative to running your own Kafka cluster. You can stream data into Kafka-enabled Event Hubs and process it with Azure Stream Analytics in the following steps:

– Create a Kafka-enabled Event Hubs namespace.

– Create a Kafka client that sends messages to the event hub.

– Create a Stream Analytics job that copies data from the event hub into Azure Blob storage.

https://docs.microsoft.com/en-us/azure/event-hubs/event-hubs-kafka-stream-analytics
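For the step that creates a Kafka client, the client only needs its connection settings pointed at the Event Hubs Kafka endpoint:

– Port 9093 on the namespace host.

– SASL_SSL with the PLAIN mechanism.

– The literal user name `$ConnectionString`.

– The namespace connection string as the password.

The sketch below builds that configuration as a dictionary with librdkafka-style keys, as used by clients such as confluent-kafka for Python. The namespace name and connection string are hypothetical placeholders.

```python
def eventhubs_kafka_config(namespace: str, connection_string: str) -> dict:
    """Kafka producer settings for an Event Hubs namespace's Kafka endpoint."""
    return {
        # Event Hubs exposes its Kafka endpoint on port 9093 of the namespace.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The user name is the literal string "$ConnectionString".
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


if __name__ == "__main__":
    conf = eventhubs_kafka_config(
        "contoso-tier9",           # hypothetical namespace
        "<connection-string>",     # placeholder for the namespace connection string
    )
    # With confluent-kafka installed, this dict could be passed to Producer(conf);
    # the event hub name is then used as the Kafka topic.
    print(conf["bootstrap.servers"])
```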

NEW QUESTION 58

You need to set up Azure Data Factory pipelines to meet data movement requirements. Which integration runtime should you use?

A. Self-hosted Integration Runtime

B. Azure-SSIS Integration Runtime

C. .NET Common Language Runtime (CLR)

D. Azure Integration Runtime

Answer: A

Explanation:

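The Data Factory documentation describes the capabilities and network support of each integration runtime type. A self-hosted integration runtime supports data movement and activity dispatch in both public and private networks. It is therefore required when a data store sits in an on-premises or other private network.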

https://docs.microsoft.com/en-us/azure/data-factory/concepts-integration-runtime
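The capability table referenced above can be summarized as data and queried. The sketch below encodes the integration runtime capabilities as the Data Factory documentation described them when this exam was current. Later features, such as managed virtual networks for the Azure IR, are not reflected. Given a capability and a network, it returns the runtime types that support them. The dictionary, the function name, and the capability labels are illustrative.

```python
# Integration runtime capabilities by network (summary, assumed as of 2019).
IR_CAPABILITIES = {
    "Azure": {
        "public": {"data flow", "data movement", "activity dispatch"},
        "private": set(),
    },
    "Self-hosted": {
        "public": {"data movement", "activity dispatch"},
        "private": {"data movement", "activity dispatch"},
    },
    "Azure-SSIS": {
        "public": {"ssis package execution"},
        "private": {"ssis package execution"},
    },
}


def runtimes_for(capability: str, network: str) -> list[str]:
    """Integration runtime types that support `capability` on `network`."""
    return [ir for ir, nets in IR_CAPABILITIES.items()
            if capability in nets.get(network, set())]


if __name__ == "__main__":
    # For example, copying from an on-premises (private network) source:
    print(runtimes_for("data movement", "private"))
```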

NEW QUESTION 59

You need to implement diagnostic logging for Data Warehouse monitoring. Which log should you use?

A. RequestSteps

B. DmsWorkers

C. SqlRequests

D. ExecRequests

Answer: C

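Explanation:

The SqlRequests diagnostic log category captures the information exposed by the sys.dm_pdw_sql_requests dynamic management view. This view holds information about all SQL Server query distributions that run as part of a SQL step in a query.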

https://docs.microsoft.com/en-us/sql/relational-databases/system-dynamic-management-views/sys-dm-pdw-sql-requests-transact-sql

NEW QUESTION 60

……
